(Inside Science) — On July 16 this year, on what marks the 75th anniversary of the first nuclear bomb test, a patient may go to the doctor for a heart scan. A student may open her textbook to study the complex chemical pathways green plants use to turn carbon dioxide in the air into sugar. A curious grandmother may spit into a vial for a genetic ancestry test and an avid angler may wake up to a beautiful morning and decide to fish at one of his favorite lakes.

If any of these people were asked to think about this selection of activities from their days, it would likely strike them as totally unrelated to the rising of a mushroom cloud above the New Mexico desert three-quarters of a century ago. But each item from the list has been touched by that event.

The device that was detonated at dawn on that fateful day unleashed the energy of around 20,000 tons of TNT from a plutonium core roughly the size of a baseball. It obliterated the steel tower on which it stood, melted the sandy soil below into a greenish glass — and launched the atomic age.

To reach this milestone, the U.S. government had marshaled masses of people and spent billions in an effort dubbed the Manhattan Project after the borough in New York where it was first based. Some of the impact of this wartime project, such as the nuclear arms race, still looms large in our public consciousness. But other impacts have faded from view for most of us.

The scan, the textbook, the genetic test and the favorite lakeside retreat represent elements of the Manhattan Project’s forgotten legacy. They are connected through a type of atom called an isotope, which was deployed in scientific labs and hospitals before World War II, but whose overwhelming prevalence in the decades after the war was enabled and pushed by the government apparatus that was a direct heir of the effort to build the bomb.

“Generally when both ordinary people and scholars have thought about the legacy of the Manhattan Project, we thought about the way in which physics and engineering were put to military use,” said Angela Creager, a science historian at Princeton University whose book “Life Atomic” chronicles the history of isotopes in the decades after WWII. “Part of what I discovered was that atomic energy had just as much of a legacy in some of the fields that we think of as peaceable as it did in military uses. … A lot of the postwar advances in biology and medicine that have really been taken for granted owe a lot to the materials and policies that were part of the Cold War U.S.”

Same Chemistry, Different Physics

Isotopes were discovered in the early 20th century, during a period of remarkable progress in our understanding of matter. Scientists had verified the existence of the atom and were figuring out its three main parts — electrons, protons and neutrons — and how they fit together. They eventually worked out that atoms could have the same number of protons, but a different number of neutrons jammed together in their tiny nuclei.

These variants, called isotopes, are different flavors of the same element. Scientific shorthand uses letters to designate the element (C for carbon, for example) and a number to indicate the total count of protons plus neutrons. C-14, for example, denotes carbon with 6 protons and 8 neutrons, while C-12 denotes the more common form of carbon that has 6 protons and 6 neutrons.
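The notation above amounts to simple arithmetic: the number after the element symbol is the count of protons plus neutrons, so subtracting the element's proton count gives the neutrons. As a minimal illustrative sketch in Python (the `neutron_count` helper and the small `PROTONS` table are hypothetical names introduced here for illustration, not anything used by the researchers quoted in this story):

```python
# Proton counts (atomic numbers) for a few elements mentioned in this story.
PROTONS = {"C": 6, "P": 15, "Co": 27, "Cs": 55, "Pu": 94}


def neutron_count(label: str) -> int:
    """Return the number of neutrons for an isotope label such as 'C-14'."""
    symbol, mass_number = label.split("-")
    # Mass number = protons + neutrons, so neutrons = mass number - protons.
    return int(mass_number) - PROTONS[symbol]


for label in ("C-12", "C-14", "P-32", "Pu-239"):
    print(label, "has", neutron_count(label), "neutrons")
```

Running it on C-12 and C-14 reproduces the 6 and 8 neutrons given above.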

Some isotopes are stable, existing for eons, while others are unstable, or radioactive. These so-called radioisotopes eventually decay into some other element or isotope, emitting radiation in the form of a particle or an energetic gamma ray in the process.
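How quickly a radioisotope decays is usually summarized by its half-life, the time it takes for half of a sample to decay. The sketch below is illustrative only: the function name `fraction_remaining` is hypothetical, and the half-lives are approximate textbook values that do not appear in this story (about 5,730 years for carbon-14 and about 14.3 days for phosphorus-32).

```python
def fraction_remaining(elapsed: float, half_life: float) -> float:
    """Fraction of a radioactive sample left after `elapsed` time.

    Both arguments must be expressed in the same unit (days, years, ...).
    """
    # Each half-life that passes cuts the remaining amount in half.
    return 0.5 ** (elapsed / half_life)


# Approximate half-lives (assumed textbook values, not taken from this story).
C14_HALF_LIFE_YEARS = 5730.0
P32_HALF_LIFE_DAYS = 14.3

print(f"C-14 left after 75 years: {fraction_remaining(75, C14_HALF_LIFE_YEARS):.1%}")
print(f"P-32 left after 30 days: {fraction_remaining(30, P32_HALF_LIFE_DAYS):.1%}")
```

The contrast between a long-lived isotope such as carbon-14 and a short-lived one such as phosphorus-32 is one reason different isotopes suit different kinds of experiments.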

Before WWII, isotopes could be separated from natural substances, or they could be artificially generated by smashing accelerated charged particles from a machine called a cyclotron into a target material.

“What’s amazing about isotopes is that they are physically detectable, but chemically identical,” said Creager. This meant scientists could replace an ordinary atom with an isotope cousin and then track that atom through chemical or biological processes.

In the 1930s, isotopes were deployed by scientists and doctors in a wide range of experiments, but their general scarcity kept the pool of users relatively exclusive.

The frenetic push to build an atomic bomb produced something that could generate much larger quantities of isotopes — a nuclear reactor — and it changed the isotope landscape profoundly.

Uncle Sam’s Isotope Shop

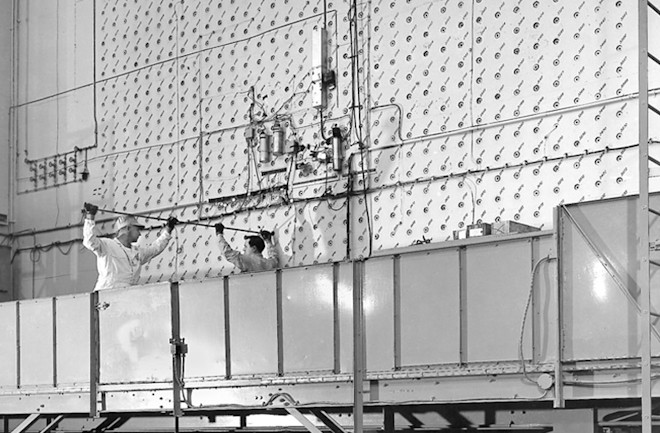

In 1943, the U.S. military built the first industrial-scale nuclear reactor in what became the city of Oak Ridge, Tennessee. It served as a pilot plant for even larger reactors that were ultimately constructed in Hanford, Washington. The reactors’ main role in the war effort was to produce the isotope plutonium-239, which scientists had concluded could form the explosive core of one of the two types of atomic bombs they were designing.

Plutonium-239 was a product of the nuclear chain reactions propagating through the reactors’ fuel slugs, small cylinders of uranium encased in aluminum that were pushed into the face of the reactor. Plutonium could be extracted by chemically processing the slugs after a certain amount of time in the reactor.

But plutonium wasn’t the only isotope the reactors made; other “by-product” isotopes were found in the fuel slugs. The scientists could also make bespoke isotopes by putting materials into the reactor to be bombarded with flying neutrons that, like the charged particles in a cyclotron, could transform the atoms they encountered. Most often these reactor-made isotopes were radioactive.

Even during the war, the Oak Ridge reactor sometimes generated isotopes for nonmilitary use, including radioactive phosphorus-32, which was used in cancer therapy.

After the war, many Manhattan Project scientists argued that the Oak Ridge reactor should start regularly supplying isotopes to scientists and doctors for their research.

At the time, reactors had certain advantages over cyclotrons for this task: They could produce a larger quantity and diversity of isotopes. Plus, scientists were looking to wrest control of nuclear technology from the military by giving it a peaceful application, Creager said.

In 1946, an Isotope Branch of the Manhattan Project was set up in Oak Ridge to oversee requests for isotopes. In June of that year, an article in Science magazine advertised the availability of around 100 different isotopes.

In a few years, the Isotope Branch (renamed the Isotope Division) was not only meeting researchers’ demand for isotopes, it was creating it. The Atomic Energy Commission, the civilian successor to the Manhattan Project, advertised the program, lowered prices on some of the most widely used isotopes to below production cost and offered training to scientists on how to properly handle radioactive materials.

“I don’t think radioisotopes would ever have been as widely used if it weren’t for the promotion of them,” Creager said. “The [Oak Ridge] reactor had been built by the government as part of the Manhattan Project. Nobody who was getting radioisotopes was paying for that infrastructure. And even the production and shipping costs were highly subsidized by the government.”

By 1950, shipments of isotopes from Oak Ridge neared 20,000. They increasingly penetrated fields from medicine to biochemistry.

Isotopes were the first — and for more than 10 years, the only — significant civilian application of nuclear reactors, said Nestor Herran, a science historian at Sorbonne University in Paris. Nuclear agencies often touted them as examples of the beneficial and peaceful side of nuclear technology and for decades national governments — including the U.S.’s main Manhattan Project collaborators Canada and the United Kingdom — were key players in the supply chain.

Alvin Weinberg, the director of Oak Ridge National Laboratory from 1955 to 1973, famously concluded: “If at some time a heavenly angel should ask what the Laboratory in the hills of East Tennessee did to enlarge man’s life and make it better, I daresay the production of radioisotopes for scientific research and medical treatment will surely rate as a candidate for first place.”

A New Way of Seeing

On Aug. 12, 1945, less than a week after the bombings of Hiroshima and Nagasaki, the Dallas Morning News ran a political cartoon showing cancer, personified as a human skeleton, running from powerful rays of atomic energy.

Although the cartoon may look naive to modern eyes, it shows the optimism at the time that new nuclear technologies could be repurposed to give doctors powerful new tools to fight disease. In 1948, the Atomic Energy Commission launched a program offering isotopes essentially for free for cancer diagnosis, therapy and research. Recipients only had to pay for shipping.

At first, it was thought that radioactive isotopes might attack cancer by concentrating in certain parts of the body and irradiating tumors from the inside. In general, this approach did not work as well as hoped. In the 1950s, doctors turned more to the isotopes cobalt-60 and cesium-137, which provided external sources of radiation for cancer treatment. Other shorter-lived isotopes became important tools in diagnostic imaging to reveal patients’ internal anatomy. Today, doctors around the world perform around 40 million diagnostic procedures per year with the most widely used medical isotope: technetium-99m.

Nuclear medicine is likely isotopes’ most noticeable application to our peacetime lives, Herran said.

When used in diagnostic tests, isotopes show doctors hidden structures inside the body. More generally, the power of isotopes to reveal the unseen is perhaps their most enduring legacy, and it wasn’t just doctors who took advantage of this power.

Isotopes can act as tiny atomic beacons that can be tracked through time when added to a system such as a cell, a whole organism or even an entire planet’s atmosphere.

Both stable and radioactive isotopes can serve as tracers, but at the end of WWII radioactive isotopes had some distinct advantages for large-scale use, and not only because governments had built tools that could produce them in large quantities.

Scientists could detect radioisotopes with relatively simple equipment like Geiger counters or X-ray film. “Had there only been stable isotopes available, the degree of expertise and the instrumentation needed would have probably restricted isotope usage to a smaller set of scientists,” Creager said.

At the end of the war, isotopes — both stable and radioactive — were the only tool scientists had to track individual atoms and molecules through chemical transformations. Researchers increasingly put them to use prying open some of nature’s black boxes.

One black box at the time was photosynthesis — the process plants use to convert sunlight, air and water into sugar. It was singled out as a question ripe for unravelling with reactor-produced isotopes as early as 1944, in a report by Manhattan Project scientists on the potential postwar uses of the government facilities.

In 1945, the chemist Melvin Calvin from the University of California, Berkeley began studying photosynthesis using the carbon-14 produced in the Berkeley cyclotron. Soon after, he began purchasing C-14 from Oak Ridge. His group exposed photosynthesizing green algae to C-14 tagged carbon dioxide. They then killed the algae after varying amounts of time and analyzed the chemical compounds that the plants had produced with the “hot” carbon. By 1958, Calvin and his colleagues, who until 1954 included his main collaborator Andrew Benson, had figured out each step of the complex chemical pathway, now termed the Calvin-Benson cycle, that most green plants use to convert carbon dioxide in the air into carbohydrates. In 1961, Calvin was awarded the Nobel Prize in chemistry for the work.

One of the main contributors to the scientists’ success was their use of paper chromatography to separate various compounds in the algae. When they held the paper up to medical X-ray film, spots formed above the compounds that had incorporated the radioactive carbon.

The physicist Freeman Dyson enthused about the technique in a letter to family after he attended a talk by Calvin in 1948: “The long-sighted people said, when nuclear energy first came on the scene, that the application to biological research would be more important than the application to power. But I doubt if anyone expected that things would actually get going as fast as they have.”

The Atomic Energy Commission estimated that the Oak Ridge reactor could produce the same quantity of radioactive carbon-14 it would take a thousand cyclotrons to make, and that the reactor could do it for about one-ten-thousandth the price.

The Dangers of Radioisotopes

In the 1940s, scientists mostly thought about radiation dangers in terms of acute effects, such as radiation burns or radiation poisoning from high levels of exposure. It was only in the 1950s and ’60s that a more thorough understanding of the potential for low-level radiation to cause long-term harm through genetic mutations emerged.

Consequently, in the early days of radioisotope tracer research, scientists were often cavalier about the dangers of radioactivity in the lab relative to later safety standards, Creager said. Similarly, early medical uses of isotopes sometimes violated what would today be considered essential ethical principles. A particularly sad example, Creager said, is a study that gave radioiron to pregnant women to track how it was absorbed. The researchers used radioiron from reactors, rather than cyclotrons, even though the reactor-produced isotopes contained a longer-lived iron isotope that ultimately posed bigger health dangers. While the scientists who conducted the original study did not think that radioactive iron posed any risk to the fetuses, a later study found that the women’s children had a small but statistically significant increase in the instances of childhood cancer. In fact, this study contributed to an emerging awareness that fetuses are especially susceptible to damage from radiation.

As knowledge about the risks of radioisotopes increased, so did regulation. Diagnostic uses of radioisotopes today generally expose patients to much lower amounts of radiation than in the early days and therapeutic uses more precisely target the tissue being treated.

Similarly, the development of better radiation detection equipment and the commercial production of radio-labeled compounds also reduced the amount of radiation exposure scientists would typically get from conducting a radioisotope tracer experiment in the lab.

Overall, when radioisotope tracers are handled correctly, the levels of radiation they generate are low compared to background radiation. On the medical side, many doctors and patients conclude that the health benefits they get from diagnostic nuclear medicine outweigh the risks.

Still, it’s important to stay mindful about even low-dose exposures.

“You have to ask, ‘What risk is worth taking, and who’s benefitting from the risk?’” Creager said.

Life From an Atom’s Point of View

Calvin and Benson’s photosynthesis work was at the vanguard of what became a flood of research using isotopes to uncover how life functions at the molecular level.

While C-14 experiments revealed a mind-boggling multitude of metabolic pathways in living organisms, radioactive phosphorus-32 allowed researchers to probe the properties of DNA. In 1952, Alfred Hershey and Martha Chase used phosphorus-32 from Oak Ridge in part of their work to show that DNA, rather than protein, is the stuff that makes up genes. Although Hershey and Chase were not the first to perform experiments that indicated DNA was the carrier of hereditary information, theirs were the ones that convinced most of the scientific community. Hershey was later a joint recipient of the 1969 Nobel Prize in Physiology or Medicine.

In the following decades, radioisotopes became essential tools in genetics research, as scientists went on to discover how DNA serves as a recipe for making proteins, how humans and animals share huge swaths of genetic code, how certain diseases are linked to genetic mutations, and much more.

“Radioisotopes had a massive impact in biology,” said Allan Spradling, a developmental biologist at the Carnegie Institution for Science in Baltimore, Maryland. “They were one of the key tools to go from an abstract view of genetics to actual molecules and specific processes in the cells. It’s hard to think of [a discovery] that would be based just on radioisotope techniques, but a vast amount of our knowledge of biology has at least a good strong contribution from them.”

In addition to studies of genetics and metabolism, scientists also tracked nutrients and hormones through the human body, elucidating the roles of these vital atoms and molecules.

Isotopes’ power to probe molecular processes didn’t stop at the boundaries of living organisms either. In the field of radioecology, which grew substantially after WWII, researchers studied how atoms move through entire ecosystems.

In the mid-1940s, the ecologist G. Evelyn Hutchinson, an early adopter of isotope techniques, released trace amounts of radioactive phosphorus-32 into the surface waters of Linsley Pond in Connecticut to study how the nutrient cycled through the water, mud, algae and plants over the course of a few weeks. He started the experiments with radioactive phosphorus from the Yale cyclotron, but continued later with shipments from Oak Ridge. The reliability and quantity of the reactor-produced isotopes allowed him to gather better data.

The experiments revealed how algae quickly took up the added phosphorus. The algae grew and often were quickly eaten by tiny animals called zooplankton. When the algae and zooplankton died, they sank to the bottom of the pond, taking some of the phosphorus with them. Seasonal temperature changes and winds could stir up the water column and bring the nutrient back to the surface, completing the cycle. One implication of the findings was that too much phosphorus might throw the whole system out of balance, leading to harmful blooms of toxic algae and photosynthesizing organisms called cyanobacteria.

Other ecologists studied how isotopes released into the environment during nuclear weapons production and testing traveled through the environment. One particularly influential finding, based largely on studies near the Hanford reactors in Washington state, revealed how radioactive contaminants could concentrate in animals and plants.

The ecologist Eugene Odum summarized the implications in his book “Fundamentals of Ecology”: “We could give ‘nature’ an apparently innocuous amount of radioactivity and have her give it back to us in a lethal package.” This concept of bioaccumulation was later applied to other pollutants such as chemical pesticides.

“In my view, the use of radioisotopes made it possible to measure many kinds of rates of different ecological processes. P-32 and C-14 in particular were early breakthroughs. But stable isotopes have also made a big impact on the scale of research that can be done in the field,” said Alan Covich, an ecologist at the University of Georgia in Athens.

Science historian Herran pointed out that ecologists’ use of isotopes in the 1950s helped cement a view of nature as a series of networks that could be described by the flow of energy and materials.

The Legacy Continues

The story of how society shapes science — and how science shapes society — contains plenty of twists. Shortly after Calvin reported his eponymous cycle, an article in the Christian Century went so far as to predict that the discovery would lead to “a vast increase in the world’s food supply within the next year or so.”

It didn’t work out that way. However, recently, scientists have achieved some preliminary successes tweaking photosynthesis to increase crop yields, an effort that has taken on increasing importance as plants face stresses from a warming world, said Krishna Niyogi, a plant biologist at the University of California, Berkeley. The scientists use tools, such as high-powered computer modeling and genetic engineering, that didn’t exist until recent decades.

Genetics research has exploded with applications to everyday life. Scientists and commentators in the mid-century never could have imagined today’s breadth of knowledge in molecular biology, Spradling said. While it’s impossible to single out any one technique as responsible for applications such as genetic testing, the whole field owes a debt of gratitude to radioisotopes, he said.

In other ways, history, as they say, repeats itself. Knowledge about the way phosphorus in ponds and lakes can cause algae blooms and fish die-offs helped lead to actions in the 1960s and ’70s to limit phosphorus from sources such as laundry detergents, Covich said. W.T. Edmondson, a former student of Hutchinson’s, campaigned to clean up Lake Washington, near Seattle, by diverting sewage, another big source of phosphorus. The water quality and health of the fish populations vastly improved. “But often people fall back and forget the basic science that concluded control of all phosphorus sources is still needed,” Covich said. “Now we are getting more toxic cyanobacteria as the phosphorus from sewage treatment plants and climate warming are creating another form of toxic brew.”

It is hard to predict how the scientific chain reactions touched by the Manhattan Project’s isotopes program will propagate through the next 75 years. But looking back, many scientists and historians agree that the effects to date have been profound.

“I basically came to the conclusion, in the course of researching my book, that radioisotopes would never have had the impact that they ended up having if not for World War II, which fast-tracked the development of nuclear technology on a massive scale,” Creager noted.

Radioisotopes stretch the Manhattan Project’s legacy into realms of everyday life we don’t often connect to the A-bomb. They show how physics and engineering link to biology and medicine, how science links to policy, and how an ordinary person’s ordinary day links to the weight of human history. In that sense, these atomic tracers reveal not just how organic molecules transform or how blood flows through a patient’s heart; they reveal the sinews of society itself.

Acknowledgement: The author would like to credit Angela Creager’s book “Life Atomic” for providing a broad overview of the topic, as well as many specific details and written quotes mentioned in this story.

Catherine Meyers is a deputy editor for Inside Science. This story originally appeared in Inside Science.