Advancements in robotics technology are forcing us to change our perception of what a robot is. From smart cars to interactive Segways, more powerful computer programs are giving machines the ability to act alongside us, rather than simply for us.

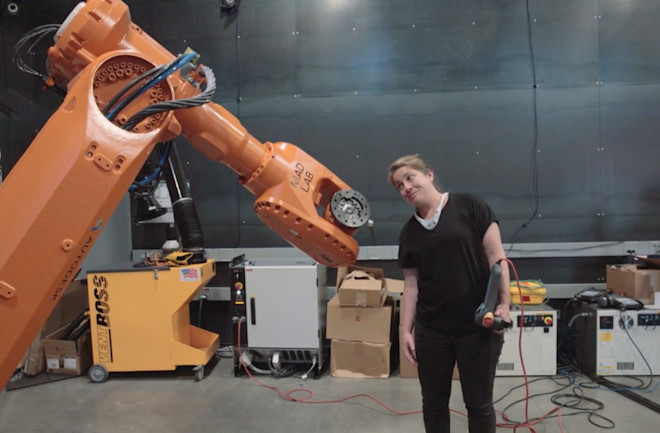

Madeline Gannon, whose research studio Madlab focuses on human-computer interactions, is using new programs to give one of the most basic robots the ability to transcend the boundaries of its creation.

By combining innovative software with motion capture technology, she’s reinvented the robotic arm — the workhorse on assembly lines. Gannon designed software that allows a robotic arm to observe and parrot human movements. She envisions robots less as servants and more as collaborators, and her work is a big step toward that goal. Instead of performing repetitive, point-by-point tasks, Gannon wants robots to use information from their movement to build a logical framework for understanding and anticipating our actions.

Discover spoke with Gannon about the limitations of current robots, teaching robots new tricks, and being a robotic artist.

Discover: You originally started studying architecture?

Gannon: I’m doing my PhD in computational design at Carnegie Mellon University, and it’s housed in the School of Architecture. It’s basically anything that has to do with design and anything that has to do with a computer. So I’ve been working for many years now on inventing better ways to communicate with machines that can make things. And industrial robots are some of the most incredible machines for making things with. They’re so adaptable, and so robust that it’s just really fantastic to work with them.

But the problem is that they’re very hard to use and they’re very dangerous to use. So that’s sort of what led me to develop this control software that’s just a little more intuitive and that helps keep you safe when you’re working in really close-quarter contact with this machine.

Are there many parallels to architecture in how you design a machine?

G: I guess the way that I work is largely trans-disciplinary. So I’m playing a computer scientist and a roboticist, but the questions that I ask with this technology are really informed by architecture. So Quipt is really about how a person and kinetic objects are interacting in space.

A lot of what I’m trying to get it to do is to act like how people would work together in space. And I think that’s just a completely different approach than if you were working inside a robotics department, where they might be doing path planning and optimization.

It seems like Quipt and the Robo.op database you created are kind of the breakthroughs. Are you still working on them?

G: Quipt grew out of Robo.op as an open-source way of communicating with these machines that’s a little easier than their usual workflow. Usually when you create a program for an industrial robot, you have to teach it points: you use a joystick to move it into place and record that point, then move it to a new place and record that point, and you build up the motion that way.

That’s very iterative and it takes a lot of training to get that right. And then at the same time, those programs usually run on a robot for a long period of time, the same simple task over and over, 24 hours a day, 7 days a week. And it doesn’t know anything else; it’s just the task that has been programmed on it.

You mentioned that your library is open source. Have you seen many outside people work with you?

G: There’s been some interest. One of the challenges of working with industrial robots is that every brand of robot has its own programming language, so the only people who can work with me on this project are those who own the same brand of robot, which is a serious limitation and very unfortunate.

What are you working on right now?

G: I’m working on developing Quipt a little further. The video you wrote about earlier, that’s really looking at a basic proof-of-concept idea that we can program some spatial behaviors into a robot so it can work with us as if it were another human on a task. So what I’m working on now is developing task-specific behaviors.

So, the first thing is being able to put a film camera on the end of the robot and record different camera moves for someone like a director. So I’m building out the different ways that a director would work with a human camera operator, and figuring out how the director can communicate with the robot in a very similar manner, one that is native to how this person practices their craft.

You talk about robot helpers as an extension of ourselves. Is this software aimed at using robots as tools or could it be applied to robots that think and move for themselves in the future?

G: I like the idea of the robot as a collaborator. And there could be some kind of hierarchy there where maybe it’s an apprentice or a helper.

There’s enough access to technology now that we can understand how a person completes a task in a shared space with a robot, and we should be able to codify that task in a way that the robot doesn’t need to mimic them, but it can know and help out in completing that task.

Where else do you see your technology being implemented?

G: I’m really excited for when you take this machine out of a static controlled setting like a factory and into live, dynamic environments. Another scenario would be on a construction site.

There’s already a level of danger involved and people are aware of their environments. But you can bring this robot and have it help a mason move larger amounts of materials quicker, or help a plumber bend a pipe.

These machines are often used in factory settings for spot-welding chassis. You could bring this robot onto a construction site for the same purpose as in a factory, but it will need an awareness of the people around it and the environment around it.

What are some of the biggest challenges you had to overcome while creating this technology?

G: I’m asking this machine to do exactly what it’s designed not to do. So that was about the biggest challenge to overcome. The motion capture system is really about capturing high-fidelity movement with sub-millimeter precision, while the robot is really about repeating a predefined static task over and over again.

So it’s not generally used for live control, or for being able to change its mind about where it’s going next. So that was a big hurdle to try to overcome, to get the machine to respond quickly to what I’m doing.

It kind of sounds like the robot has to learn.

G: Yeah, I don’t have any machine-learning algorithms implemented yet in here, but I think that that is the direction that development is going to go towards. You can imagine, if you are a master craftsman and you are working with an apprentice, over time the apprentice learns the nuances and body gestures of the master, and they become a better team as they work longer together.

I envision that we can build those kinds of behaviors between a person and an industrial robot.

Where do you see human-robot interactions going, say, within the next ten years?

G: It seems that there’s a division into two camps. One camp is sort of artificial intelligence, where these machines are teaching themselves how to do automation tasks, so sort of replacing and optimizing human labor.

And then the other camp is complementary but perhaps opposite, where it’s just finding a better way to interface these machines with people and to extend and expand and augment our abilities with these same machines instead of replacing us.

I really liked the artwork on your website, where the robot would trace a pattern you drew on your skin and then recreate it. Is that something you’re still working on?

G: Yeah, that’s definitely still in production. The original product, Tactum, lets you design and customize 3-D models directly on your body that can then get sent to a 3-D printer. And because the underlying model is based on the form of your body, it will automatically fit you.

One of the motivations behind building this software, so we can work safely with industrial robots, is that I’d like to be able to design on my body and have the robot fabricate and 3-D print on my body instead of having to send that away to another machine, sort of closing the loop there between design and fabrication.

Are there any collaborators outside of the world of robotics that you’d like to bring into your work?

G: The development of Quipt was sponsored by Autodesk and Pier 9, and they’ve been incredibly supportive of my research and my work and our collaboration sponsors. It’s interesting, Google is now getting into the space of industrial robotics, and I believe Apple is as well.

It’s an interesting time to be working with these machines: they’ve been around for about half a century, but they’re only now being explored outside of manufacturing settings. And that to me is really exciting. I’m happy that bigger companies and industries are seeing the potential and bringing these things out into the wild.