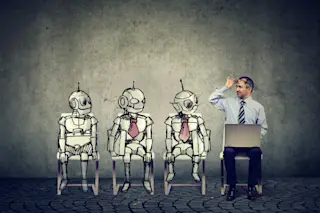

So far, most people encounter robots in the pages of science fiction stories. (Not counting the ones that can deliver food on college campuses or make you a latte.) But in the not-too-distant future, interacting with robots will become even more common in the real world. When that happens, robots will need the social skills to properly interact with humans.

Still, surprisingly little work has been done to make sure robots can socialize, even on a basic level. But a team of MIT researchers has taken up the challenge: The scientists developed a framework for robotics that incorporates social interactions, enabling the machines to understand how we help — or hinder — our fellow humans.

“Robots will live in our world soon enough, and they really need to learn how to communicate with us on human terms,” says Boris Katz, head of the InfoLab Group at MIT’s Computer Science and Artificial Intelligence Laboratory and one of the study authors, in a press release. “They need to understand when it is time for them to help and when it is time for them to see what they can do to prevent something from happening.”

Simulated Socializing

The scientists' new framework defines three types of robots. Level 0 robots have only physical goals and cannot reason socially. Level 1 robots have both physical and social goals, but assume that other robots have only physical goals. Level 2 robots have physical and social goals and assume that other robots have both kinds of goals, too. The scientists suggest that these higher-level bots are the ones that work best with other robots, and that they could potentially work well with humans, too.

To test this framework, the researchers created a simulated environment in which one robot watches another, makes a guess about the other robot’s goals, and then chooses either to help or to hinder it, depending on its own goals.
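The level hierarchy and the help-or-hinder decision can be pictured as a simple recursion: a level-k robot adds to its own physical reward some share of what it thinks the other robot wants, modeling that robot as level k-1. The Python sketch below is a toy illustration of that idea under stated assumptions, not the MIT team's code. Everything in it is hypothetical: goals are target positions on a number line, `attitude` is +1 for a helper and -1 for a hinderer, and the names `Agent`, `reward`, and `choose` are made up for this example.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional

# Hypothetical outcome: where each agent ends up on a number line.
Outcome = Dict[str, int]


@dataclass
class Agent:
    name: str
    goal: int        # hypothetical physical goal: a target position
    attitude: float  # hypothetical social weight: +1 helps, -1 hinders, 0 ignores
    level: int       # reasoning level: 0, 1, or 2
    # The agent this one reasons about; excluded from repr/eq to avoid cycles.
    other: Optional["Agent"] = field(default=None, repr=False, compare=False)


def physical_reward(agent: Agent, outcome: Outcome) -> float:
    # Highest (zero) when the agent ends up exactly at its own goal.
    return -abs(outcome[agent.name] - agent.goal)


def reward(agent: Agent, outcome: Outcome, level: Optional[int] = None) -> float:
    """Toy recursive reward: a level-k agent models the other as level k-1."""
    if level is None:
        level = agent.level
    r = physical_reward(agent, outcome)
    if level == 0 or agent.other is None:
        return r  # level 0: physical goals only, no social reasoning
    # A positive attitude adds the other agent's (modeled) reward, i.e. helping;
    # a negative attitude subtracts it, i.e. hindering.
    return r + agent.attitude * reward(agent.other, outcome, level - 1)


def choose(agent: Agent, outcomes: List[Outcome]) -> Outcome:
    # Pick the outcome the agent values most (ties go to the first one listed).
    return max(outcomes, key=lambda o: reward(agent, o))


# A level 1 helper watching a level 0 "walker" whose goal is position 5.
helper = Agent("helper", goal=0, attitude=1.0, level=1)
walker = Agent("walker", goal=5, attitude=0.0, level=0)
helper.other, walker.other = walker, helper

outcomes = [{"helper": 0, "walker": 5}, {"helper": 0, "walker": 9}]
print(choose(helper, outcomes))  # {'helper': 0, 'walker': 5}
```

Flipping `attitude` to -1.0 turns the same agent into a hinderer that prefers leaving the walker far from its goal. In the study, the observing robot has to infer the other robot's goal from its behavior; this sketch skips that step and simply reads the goal from a field.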

The team created 98 scenarios in which virtual robotic agents guessed one another’s goals, weighed those guesses against their own goals, and then helped or hindered each other accordingly. Later, when humans watched videos of the robots interacting, depicted as a series of computer animations, their predictions of the robots’ goals largely matched the predictions the robots made about each other.

Katz says this experiment is a small but important step toward teaching robots to recognize human goals and engage with people. “This is very early work and we are barely scratching the surface,” says Katz in the press release, “but I feel like this is the first very serious attempt for understanding what it means for humans and machines to interact socially.”

A Kinder AI?

Beyond training robots, this research may eventually have uses outside artificial intelligence. Andrei Barbu, one of the study authors and a research scientist at MIT’s Center for Brains, Minds, and Machines, points out that the inability to quantify social interactions creates problems in many areas of science.

For example, while blood pressure and cholesterol can be measured easily and accurately, there is no quantitative way to determine a patient’s level of depression or to assess precisely where a patient lies on the autism spectrum. But because both depression and autism involve social impairment, at least to some degree, computational models like this one could provide objective benchmarks for evaluating human social performance and may make it easier to develop and test drugs for these conditions, Barbu says.

A well-documented problem in artificial intelligence is that models trained simply by feeding them huge amounts of data can become very powerful, but they also tend to turn out sexist and racist. The MIT team thinks their approach might be a way to avoid that problem. “Children don’t come away from their families with the idea that the skin color of their parents is superior to everyone else’s just because 95 percent of their data comes from people with that skin color,” says Barbu, “but that’s exactly what these large-scale models conclude.”

By learning to estimate the goals and needs of others, rather than simply making associations and inferences based on tremendous amounts of data, this new method of training robots might eventually create AI that is much more aligned with human interests. In other words, it might create kinder AI.

Meanwhile, the team plans to build more realistic simulated environments, including some in which the robots can manipulate household objects. One day, when a robot is your co-worker, your house cleaner, and possibly your caregiver, you may want it to be able to relate to you as a person. This research is a first step in helping machines do just that.