HAL 9000, depicted as a glowing red "eye," was the frighteningly charismatic computer antagonist in Stanley Kubrick's 1968 movie "2001: A Space Odyssey." (Credit: Screengrab from YouTube)

If you’ve ever tried to hold a conversation with a chatbot like CleverBot, you know how quickly the conversation turns to nonsense, no matter how hard you try to keep it together. But now, a research team led by Bruno Golosio, assistant professor of applied physics at Università di Sassari in Italy, has taken a significant step toward improving human-to-computer conversation. Golosio and colleagues built an artificial neural network, called ANNABELL, that aims to emulate the large-scale structure of human working memory in the brain, and its ability to hold a conversation is eerily human-like.

Natural Language Processing

Researchers have been trying to design software that can make sense of human language, and respond coherently, since the 1940s. The field is known as natural language processing (NLP), and although amateurs and professionals enter their best NLP programs into competitions every year, the past seven decades still haven’t produced a single NLP program that allows computers to consistently fool questioners into thinking they're human. NLP has attracted a wide variety of approaches over the years. Linguists, computer scientists and cognitive scientists have focused on designing so-called symbolic architectures: software programs that store units of speech as symbols and manipulate them according to explicit rules. It’s an approach that requires a lot of top-down management.

A Different School

Another school of thought, the "connectionist approach," holds that it’s more effective to process language via artificial neural networks (ANNs). These computerized systems begin as blank slates, and then they learn to associate certain speech patterns with clusters of interconnected processing units. This open-ended structure enables ANNs to build connections on the fly, with very little direct supervision — in much the same way a human brain does. The crucial distinction between the two approaches is that symbolic architectures require specific rules in order to make decisions, while ANNs aren’t as beholden to rigid structures. Instead of checking whether an answer is right or wrong, ANNs choose the answer that’s most likely to be right. And when it comes to natural language processing, this approach is much more versatile, and better at crafting human-sounding answers.
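To make the contrast concrete, here is a minimal Python sketch. It is an illustration only, not code from ANNABELL or from any real NLP system: the `RULES` table, the `ASSOCIATIONS` weights and both function names are hypothetical, and the weights are set by hand here, whereas a real neural network would learn them from examples.

```python
# Symbolic style: an answer exists only if an explicit rule matches exactly.
RULES = {"what is a dog": "an animal"}  # hypothetical rule table

def symbolic_answer(question):
    """Return the stored answer, or None when no rule covers the question."""
    return RULES.get(question)

# Connectionist style (greatly simplified): every candidate answer gets a
# score from word associations, and the highest-scoring one is chosen.
ASSOCIATIONS = {  # hypothetical, hand-set association strengths
    "an animal": {"dog": 0.9, "cat": 0.8},
    "a body part": {"hand": 0.9, "arm": 0.7},
}

def likely_answer(question):
    """Pick the answer whose associated words best match the question."""
    words = question.split()
    scores = {
        answer: sum(strengths.get(word, 0.0) for word in words)
        for answer, strengths in ASSOCIATIONS.items()
    }
    return max(scores, key=scores.get)

print(symbolic_answer("is the dog a pet"))  # no matching rule
print(likely_answer("is the dog a pet"))    # best-scoring guess
```

The rule table has nothing to say about a question it has never seen, while the scoring version still produces its best guess.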

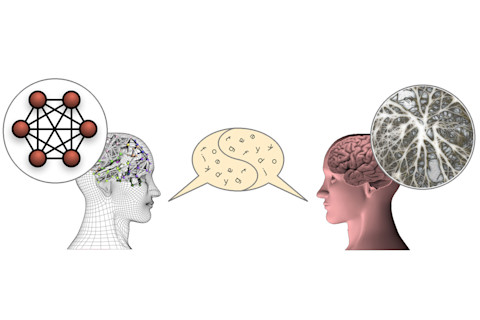

ANNABELL is a cognitive architecture model made up of artificial neurons, which learn to communicate using human language starting from a blank slate. (Credit: Bruno Golosio)

Inspired by the successes of earlier ANNs, Golosio and his team engineered a brand-new type of ANN known as ANNABELL (Artificial Neural Network with Adaptive Behavior Exploited for Language Learning). The team designed ANNABELL to be able to pick up language by building a system of interconnected associations from scratch, in the same way a human infant does. To give ANNABELL the right tools for the job, Golosio’s team designed their network around a very specific model of human-style memory.

A Working Model

Memory is generally divided into short-term and long-term storage. Short-term memories are easy to retrieve and easy to lose, while long-term memories take longer to form, but stick around. Many researchers also add a third category, working memory, which is sometimes described as your “memory of the present moment.” Have you ever asked someone, “What was that you just said?” and started to repeat the part of the sentence you caught, only to realize with surprise that you somehow remembered the whole sentence, and could repeat it verbatim? That’s your working memory system in action.

And as Golosio and his team knew, an ANN designed around a multi-component working memory model could be a powerful tool for processing and creating human-like communication. “For example, if someone asks you, ‘what is your favorite movie?’” Golosio explains, “you can focus your attention on the word ‘movie,’ and use that word as a cue for retrieving information from long-term memory into working memory, like when you type a keyword into Google.”

And similar to using Google, each of the “search results” in your brain’s working memory contains links to stashes of more detailed information about the topic. Golosio’s team set out to emulate this search-and-link functionality in an ANN, an approach they hoped might take ANNABELL to a new level of human-likeness.
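The keyword-search analogy can be sketched in a few lines of Python. This is a toy illustration, not ANNABELL's actual mechanism, which works with simulated neurons rather than lists of strings. The stored phrases, the `retrieve` function and the `capacity` limit are all assumptions made for the example.

```python
from collections import deque

# Hypothetical long-term memory: a handful of stored phrases.
LONG_TERM_MEMORY = [
    "my favorite movie is 2001",
    "the movie has a computer called HAL",
    "my favorite animal is the dog",
]

def retrieve(cue, long_term, capacity=2):
    """Copy phrases containing the cue word into a small working-memory buffer.

    The buffer holds at most `capacity` items; older items are pushed out,
    a crude stand-in for working memory being small and short-lived.
    """
    working_memory = deque(maxlen=capacity)
    for phrase in long_term:
        if cue in phrase.split():
            working_memory.append(phrase)
    return list(working_memory)

# Focus on the word "movie" and use it as the retrieval cue.
for phrase in retrieve("movie", LONG_TERM_MEMORY):
    print(phrase)
```

Each retrieved phrase could in turn supply new cue words ("computer," "HAL"), which is the "links to more detailed information" part of the analogy.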

Correct and Coherent

ANNABELL’s building blocks are artificial neurons simulated inside a powerful computer. Instead of trying to simulate the millions of chemical interactions that go on inside a real neuron every second, the computer simply calculates the likelihood that each neuron will fire, based on the inputs it receives from the other simulated neurons in the network. As in a biological brain, digital neurons that fire together wire together; and that ability to fine-tune the strength of neural connections (and thus, the likelihood that a certain neuron’s firing will trigger certain other neurons to fire) gives ANNABELL the power to learn new associations.
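The "fire together, wire together" idea can be illustrated with a short Python sketch. The article does not give ANNABELL's actual equations, so everything here is an assumption chosen for simplicity: a logistic function turns the weighted inputs into a firing likelihood, and a basic Hebbian rule with a hypothetical learning rate of 0.1 strengthens the connections.

```python
import math

def firing_probability(weights, inputs, bias=0.0):
    """Turn the weighted sum of inputs into a likelihood between 0 and 1."""
    total = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1.0 / (1.0 + math.exp(-total))

def hebbian_update(weights, inputs, fired, rate=0.1):
    """Strengthen connections from inputs that were active when the neuron fired."""
    if not fired:
        return list(weights)
    return [w + rate * x for w, x in zip(weights, inputs)]

weights = [0.0, 0.0, 0.0]  # blank slate: no associations yet
inputs = [1, 0, 1]         # the first and third input neurons are active

p_before = firing_probability(weights, inputs)
for _ in range(10):        # the neurons repeatedly fire together
    weights = hebbian_update(weights, inputs, fired=True)
p_after = firing_probability(weights, inputs)

print(p_before, p_after)   # the likelihood of firing rises with practice
```

Only the connections from the active inputs grow; the silent middle input keeps its weight of zero, so the neuron learns an association with that particular pattern rather than with everything at once.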

(Credit: Golden Shrimp/Shutterstock)

So far, that description fits any neural network, but Golosio and his team took ANNABELL a step further. They structured ANNABELL’s large-scale neural connectivity in a way that simulates verbal components of human working memory. This means ANNABELL can focus on, or “listen” to, groups of words, associate them with other words and phrases, explore possible ways of combining words and receive “rewards” for answering questions correctly.

Once ANNABELL’s neural structure was in place, Golosio and the team fed the system huge databases of words and sentences: descriptions of relationships between people, between parts of the body and between animals and their categories. They also included sample dialogues between a mother and child, as well as a text-based virtual house.

Making Small Talk

Then researchers asked ANNABELL questions about what she’d learned, and the results were striking. ANNABELL correctly answered 82.4 percent of questions related to the people dataset, 85.3 percent of those related to the parts of the body dataset, and 95.3 percent of those related to the categorization dataset. What’s more, in natural conversation, ANNABELL comes across as remarkably human-like, especially when compared with other current-generation NLP software. The results appeared Wednesday in the journal PLOS ONE.

While even ANNABELL is still a long way from passing for human, the system serves as a proof of concept for an intriguing idea: that it’s possible to start from a blank slate, and teach a computer to have coherent conversations about potentially unlimited topics. In the immediate future, Golosio and his team plan to upload ANNABELL into a robot, which can experience the world firsthand, and learn to communicate about those experiences. That may mean tomorrow’s generation of chatbots will be not only coherent, but able to talk about experiences they’ve actually had in the real world.