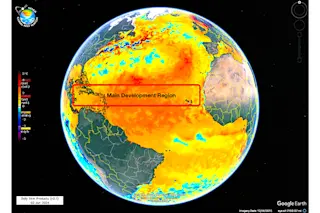

Oceanographers have mapped the seafloor and tracked endangered marine species for decades using autonomous underwater vehicles, or AUVs. But the ocean is a huge, constantly moving three-dimensional ecosystem, and obtaining precise, real-time observations of marine life and of oceanic conditions such as temperature, current and salinity is quite challenging and astronomically expensive.

“Because of this, it has not been possible to get large-scale bulk phenomena over large spatial and temporal domains. You can’t do it with a ship,” says Kanna Rajan, an engineer at the Monterey Bay Aquarium Research Institute who studies artificial intelligence capable of making decisions. That’s why he wants to do it with robots.

When Rajan met Joao Sousa, an engineer at the University of Porto in Portugal, an idea for how to study the oceans in real-time 3-D was born. Sousa had just given a lecture suggesting that to really study the oceans, you need a large network of robots working together seamlessly to gather data. Sousa had the infrastructure to connect autonomous machines together, but he needed a smart robot from which to build his fleet. And Rajan knew he could program a robot smart enough for the job. The two quickly agreed to collaborate.

Autonomous underwater vehicles, or AUVs, will be paired with flying drones to study the seas in real time. | M. Oliveira and J. Tasso, University of Porto

Sousa already had a fleet of small, torpedo-shaped underwater robots that he used to explore shallow coastal areas off Portugal. Each was equipped with sensors that can measure conductivity, temperature and depth. Rajan installed software allowing the robots to make decisions autonomously. The software gives the vehicles “smarts,” he says. “Instead of saying, ‘Turn right and dive,’ you tell it, ‘I want you to do a survey of this area.’ ” It’s like a Roomba in the open ocean: You just turn it on, set it loose, and it dives, collects measurements, routes itself and returns before its battery runs out.

Last summer, the pair put their ideas to the test in a trial run off the southern coast of Portugal, to prove that this network of robots could follow a moving target. From the stern of a Portuguese Navy ship, the two scientists and their teams launched several AUVs into the water. Then they threw overboard a cylindrical drifter buoy (the target) that broadcasts its position to the AUVs and serves as a mobile hotspot, allowing the underwater vehicles to communicate with the aerial ones and the support vessel.

The third element was an eye in the sky: an off-the-shelf fixed-wing aircraft about 6 feet long with a GoPro camera mounted slightly behind its nose. After the aerial drone spotted the buoy, the underwater and surface vehicles converged on that area to measure the surrounding ocean environment: current direction, speed, strength, temperature and water density.

In May, the crew will take the three-dimensional experiment to the next level. Instead of a floating buoy, the flying drone and the underwater vehicles will track a fish called Mola mola, or ocean sunfish — a creature that can weigh up to 5,000 pounds and has a bad habit of getting caught in commercial tuna pens. Marine biologists working with Rajan and Sousa will fit 30 of these gentle giants with GPS tags, then send out the drone and underwater vehicles to pursue them for several days to learn more about their life cycle and feeding habits.

As the duo’s marine technology and software improve, they hope to make the underwater, surface and aerial vehicles truly autonomous, capable of both communicating with each other and making their own decisions, says Rajan. Then it will be possible, for the first time, to investigate large-scale phenomena such as ocean fronts, eddies and blooms, as well as to study the movements and environments of marine animals that dive to depths no human could withstand.

“The step after that is, we set out an ensemble of vehicles and tell them, ‘You go figure it out.’ ”

[This story originally appeared in print as "Eyes in the Sky — and the Sea"]